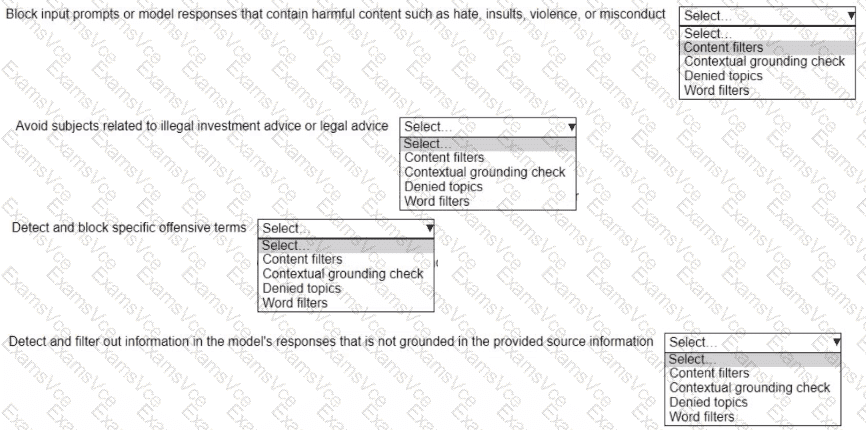

Block input prompts or model responses that contain harmful content such as hate, insults, violence, or misconduct:Content filters

Avoid subjects related to illegal investment advice or legal advice:Denied topics

Detect and block specific offensive terms:Word filters

Detect and filter out information in the model’s responses that is not grounded in the provided source information:Contextual grounding check

The company is using a generative AI model on Amazon Bedrock and needs to mitigate undesirable and potentially harmful content in the model’s responses. Amazon Bedrock provides several guardrail mechanisms, including content filters, denied topics, word filters, and contextual grounding checks, to ensure safe and accurate outputs. Each mitigation action in the hotspot aligns with a specific Bedrock filter policy, and each policy must be used exactly once.

Exact Extract from AWS AI Documents:

From the AWS Bedrock User Guide:

*"Amazon Bedrock guardrails provide mechanisms to control model outputs, including:

Content filters: Block harmful content such as hate speech, violence, or misconduct.

Denied topics: Prevent the model from generating responses on specific subjects, such as illegal activities or advice.

Word filters: Detect and block specific offensive or inappropriate terms.

Contextual grounding check: Ensure responses are grounded in the provided source information, filtering out ungrounded or hallucinated content."*(Source: AWS Bedrock User Guide, Guardrails for Responsible AI)

Detailed Explanation:

Block input prompts or model responses that contain harmful content such as hate, insults, violence, or misconduct: Content filtersContent filters in Amazon Bedrock are designed to detect and block harmful content, such as hate speech, insults, violence, or misconduct, ensuring the model’s outputs are safe and appropriate. This matches the first mitigation action.

Avoid subjects related to illegal investment advice or legal advice: Denied topicsDenied topics allow users to specify subjects the model should avoid, such as illegal investment advice or legal advice, which could have regulatory implications. This policy aligns with the second mitigation action.

Detect and block specific offensive terms: Word filtersWord filters enable the detection and blocking of specific offensive or inappropriate terms defined by the user, making them ideal for this mitigation action focused on specific terms.

Detect and filter out information in the model’s responses that is not grounded in the provided source information: Contextual grounding checkThe contextual grounding check ensures that the model’s responses are based on the provided source information, filtering out ungrounded or hallucinated content. This matches the fourth mitigation action.

Hotspot Selection Analysis:

The hotspot lists four mitigation actions, each with the same dropdown options: "Select...," "Content filters," "Contextual grounding check," "Denied topics," and "Word filters." The correct selections are:

First action: Content filters

Second action: Denied topics

Third action: Word filters

Fourth action: Contextual grounding check

Each filter policy is used exactly once, as required, and aligns with Amazon Bedrock’s guardrail capabilities.

[References:, AWS Bedrock User Guide: Guardrails for Responsible AI (https://docs.aws.amazon.com/bedrock/latest/userguide/guardrails.html), AWS AI Practitioner Learning Path: Module on Responsible AI and Model Safety, Amazon Bedrock Developer Guide: Configuring Guardrails (https://aws.amazon.com/bedrock/), , , , ]