This question asks you to match each AI system practice to the responsible AI principle it supports, as those principles are defined in the AWS Responsible AI Guidelines and the Amazon Bedrock Responsible AI documentation.

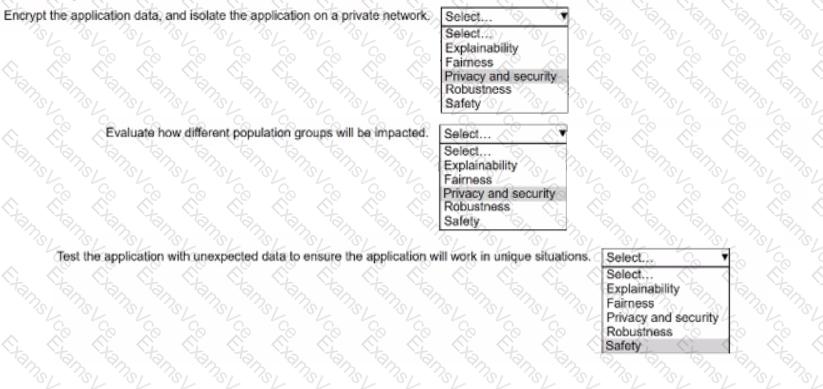

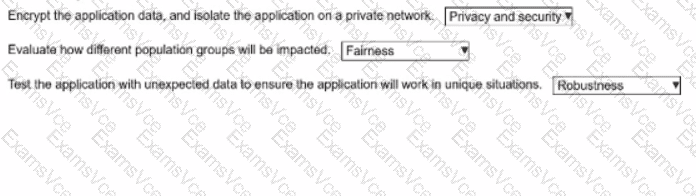

Scenario 1:

Encrypt the application data, and isolate the application on a private network.

→ Principle: Privacy and Security

From AWS documentation:

"Protecting user data through encryption, secure network isolation, and access control aligns with the Responsible AI principle of privacy and security. AWS recommends securing all data used by AI systems, both in transit and at rest, to maintain trust and regulatory compliance."

Scenario 2:

Evaluate how different population groups will be impacted.

→ Principle: Fairness

From AWS documentation:

"The fairness principle ensures that AI models do not discriminate or generate biased outcomes across population groups. Fairness assessment involves evaluating performance metrics across demographic segments and mitigating any bias detected."

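To make the fairness assessment concrete, here is a minimal sketch of comparing metrics across population groups. The record layout, the group names, and the helpers `per_group_metrics` and `max_gap` are all hypothetical and chosen only for illustration; a real assessment would use the application's own data and whichever metrics the team has agreed on.

```python
from collections import defaultdict


def per_group_metrics(records):
    """Compute accuracy and selection rate per group.

    records: iterable of (group, y_true, y_pred) tuples with binary labels.
    This layout is an assumption made for the example.
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(y_true == y_pred)
        c["positive"] += int(y_pred == 1)
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            "selection_rate": c["positive"] / c["n"],
        }
        for group, c in counts.items()
    }


def max_gap(metrics, key):
    """Largest difference in one metric between any two groups."""
    values = [m[key] for m in metrics.values()]
    return max(values) - min(values)


# Made-up sample data: (group, true label, predicted label).
sample = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]

metrics = per_group_metrics(sample)
for group, values in sorted(metrics.items()):
    print(group, values)
print("selection rate gap:", max_gap(metrics, "selection_rate"))
```

In this made-up sample both groups have the same accuracy, yet the model predicts the positive class far more often for one group than the other. That is the kind of disparity a per-group evaluation is meant to surface and that an overall accuracy figure would hide.
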
Scenario 3:

Test the application with unexpected data to ensure the application will work in unique situations.

→ Principle: Robustness

From AWS documentation:

"Robustness refers to an AI system’s ability to maintain reliable performance under varied, noisy, or unexpected input conditions. Testing for robustness helps ensure the model generalizes well and behaves safely in edge cases."

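As a rough illustration of robustness testing, the sketch below feeds unexpected inputs to a small function and records whether each one is handled or explicitly rejected. `classify_length` is a hypothetical stand-in for the application under test, and `run_robustness_checks` is an illustrative helper, not part of any AWS tooling.

```python
def classify_length(text):
    """Hypothetical stand-in for the application under test."""
    if not isinstance(text, str):
        raise ValueError("input must be a string")
    cleaned = text.strip()
    if not cleaned:
        return "empty"
    return "long" if len(cleaned) > 20 else "short"


def run_robustness_checks(fn, cases):
    """Call fn on each case and record the outcome.

    A ValueError counts as a controlled rejection. Any other exception
    propagates, which signals an unhandled edge case.
    """
    results = []
    for case in cases:
        try:
            outcome = fn(case)
        except ValueError as exc:
            outcome = f"rejected: {exc}"
        results.append((case, outcome))
    return results


# Edge cases: empty, whitespace only, very long, non-ASCII,
# wrong types, and control characters.
unexpected_inputs = ["", "   ", "x" * 10_000, "émoji 🚀", None, 42, "\x00\x01"]

report = run_robustness_checks(classify_length, unexpected_inputs)
for case, outcome in report:
    print(repr(case)[:30], "->", outcome)
```

The point of the design is that every unexpected input ends in one of two known states: a valid result or a controlled rejection. An input that crashes the run instead marks a gap in robustness.
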
Referenced AWS AI/ML Documents and Study Guides:

AWS Responsible AI Practices Whitepaper – Core Principles of Responsible AI

Amazon Bedrock Documentation – Responsible AI and Safety Controls

AWS Certified Machine Learning Specialty Guide – AI Governance and Model Evaluation